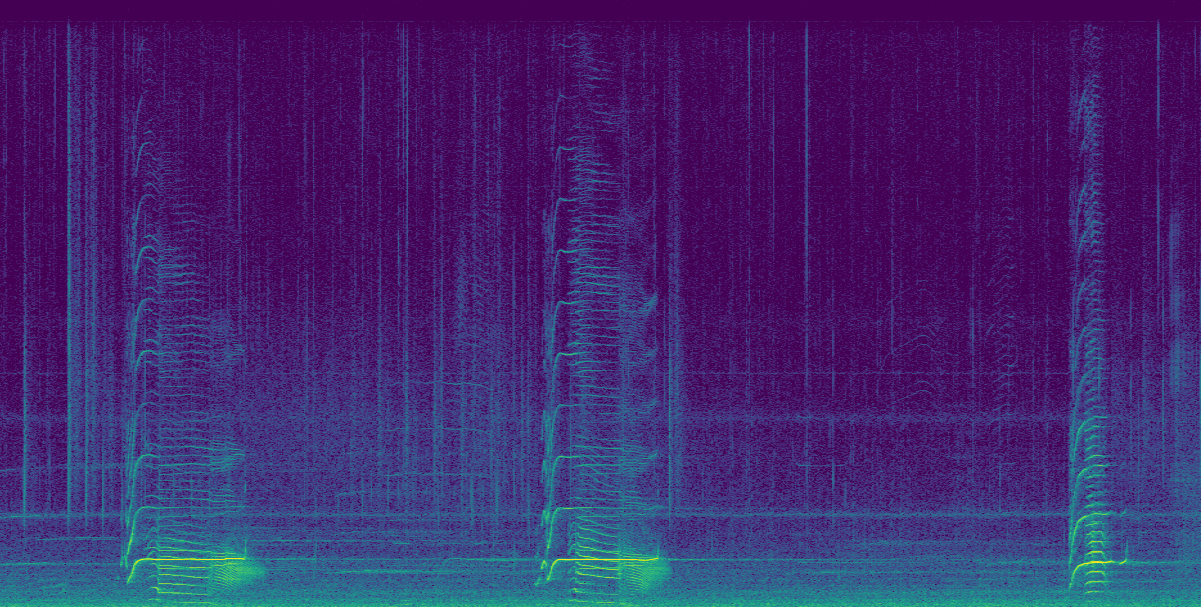

Whale acoustics is the study of how whales vocalize and communicate. Whales rely predominantly on sound to communicate, navigate, and interact with their environment. Acoustic signaling plays a crucial role in their survival, from locating prey to maintaining social connections within pods. By studying whale acoustics, we gain a deeper understanding of their behavior, social structures, and even migration patterns. This field of research relies on a growing number of underwater listening devices, ranging from stationary hydrophone deployments to autonomous gliders that cover vast areas, to capture the sounds produced by whales.

However, the sheer volume of data collected by these passive acoustic systems far exceeds what researchers can analyze manually. In response, recent years have seen a surge in the use of artificial intelligence systems designed to automatically recognize and classify whale vocalizations in acoustic data. These systems not only have the potential to outperform human analysts in accuracy but also significantly accelerate the analysis, making it feasible to process volumes of data that would otherwise be unmanageable. As a result, marine researchers can redirect their focus toward more complex aspects of whale behavior, ecosystem dynamics, and conservation measures, leaving the labor-intensive and tedious analysis of recordings to AI tools.

In the HALLO project, our team is interested in developing AI systems powered by deep learning, a subset of machine learning. Deep learning involves building and using deep neural networks, complex models that have outperformed traditional machine learning methods in various fields. These models “learn” a particular task (in our case, identifying whale vocalizations in acoustic recordings) by being exposed to large numbers of examples (data). In addition, these models can continue to improve as they are trained on new data. This capacity for improvement makes them particularly powerful for tasks such as classifying whale sounds in complex and varied soundscapes.

Our team is developing a whale forecasting system that will leverage the acoustic detections made by our deep neural networks to predict the future locations of whales. The aim is to create an effective warning system that helps prevent ships from colliding with endangered orcas off the coast of British Columbia. More details on this whale forecasting system can be found here. Meanwhile, you can explore some of the models our team has developed on the applications page or in our GitHub repository.